-

A programmer's guide to BDD

BDD is short for Behavior-driven development. The Wikipedia article on BDD is really long, but as far as I can tell BDD is better summed up as: "When you're writing code, examples are really helpful so you should get as many as you can stomach. If anything is unclear, ask for some more examples."

I don't know if it's strictly in keeping with BDD, but a lot of the time I ask for examples that aren't like previous examples. That can be pretty enlightening.

-

Finding the name of a Perl subroutine from its reference

While mucking around with Perl's Test::Simple I needed to find out the name of a subroutine from a reference. While there are several answers, finding any useful ones by the usual means was difficult. The one I landed on was using Sub::Identify, which provides the routine sub_name($) that solves the problem nicely.

Here's some example code:

    perl -MSub::Identify -e 'sub bar{return "baz"};$foo=\&bar;print Sub::Identify::sub_name($foo)."\n"'

This correctly prints "bar".

-

NetBeans's new Python type inferencing

Tor Norbye's series of weekly screenshots of cutting edge NetBeans features is interesting, but the feature displayed in this recent one has made me wonder about the balance between what IDEs can do and what they should do. The feature is fairly simple: adding a type annotation to function/method parameters in Python so NetBeans can recognize the expected type and offer semantic auto-complete for the parameters. In my opinion, this steps over the line of what an IDE should do. Here's why I think so.

What an IDE should do (when editing)

When I'm typing, I want the editing environment to enrich my ability to type. If I'm typing plain text, some sort of word-completion is a benefit. If I'm typing code, word-completion is useful, but semantic code completion can be much more useful. I say can, because sometimes the limitations of the IDE impede the usefulness of code completion. When the IDE doesn't know how to do the right thing, I often find myself yanked out of the context of the code and either have to adjust to what the IDE has done (in dynamic languages, often expanding every possible completion regardless of type) or consult an external source on what options are available. Both these actions are undesirable, because they take me out of my primary focus (the code). The code completion I've been doing up until this point has also conditioned me. I am conditioned by the IDE to use code completion as part of my writing process. When code completion fails, I have a failure in my writing process. It follows that it is desirable that code completion never fails.

When I am writing code, I'm generally writing just one language (or in some cases, such as PHP, ERB templates, Django templates or JSP, a markup combined with one language). Therefore, I want my editing environment to support the writing of that language. When I am writing Java, this means aggressive code completion, templating and creation. In fact, templating and code creation are actually more important to me in Java than code completion. Java requires a lot of boilerplate and ceremony, and the more of this the IDE can take off my hands the better. When I'm writing Python, I want the IDE to flag stuff like undeclared variables, missing imports, code style and many of the other things Norbye has demonstrated over the last weeks. I do not, however, want code completion. Maybe this is because I don't write much code that interfaces with Python libraries, but I prefer word completion. But the primary reason I don't want code completion is that it will fail. I don't want to introduce failures to my writing process.

What an IDE should not do

Simply stated, an IDE should support the language in question. It should not expand the language. The commented type annotations in NetBeans change Python. Changing the language means that the code acquires noise when viewed in other editors. Other editors do not parse anything of value from the commented type annotations.

I also think that adding semantic value to comments is a bad idea. Since comments don't fail when code is updated, there is a very real possibility that a method's parameters can be updated without the type annotations being updated with them, possibly causing NetBeans to code complete for the wrong type. This is a failure, and the result is even worse than the failure above, since the IDE is now helping you write patently incorrect code.

What I'm suggesting

It's bad form to criticize without suggesting an alternative, so here goes. I don't know if this is feasible, and it's almost certainly more work than the type annotations were.

I would like my IDE to support my normal habits when I'm writing Python. What I was taught in my early Python days was that the REPL is your friend. This is not just because you can test code snippets in it, but because Python is self-documenting. Documentation is generally written not as comments, but as docstrings. A great way to get help about something is to run some introspection on it, or use the dir() or help() functions. The dir() function lists the names of all attributes (methods included) available on an object. The help() function provides a formatted and paged listing of a type and its methods.
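A minimal sketch of that introspection, assuming a Python 3 interpreter (newer than the Python this post was written against). It uses str as the example type, and pydoc.render_doc to get the documentation as a string rather than paging it the way help() does:

```python
import pydoc

# dir() returns the attribute names (methods included) of an object or type.
string_methods = [name for name in dir(str) if not name.startswith("_")]
print(string_methods[:5])

# help(str) pages its output interactively. pydoc.render_doc returns the
# documentation as a plain string instead, which suits a read-only panel.
help_text = pydoc.render_doc(str, renderer=pydoc.plaintext)
print(help_text.splitlines()[0])
```

The plain-text renderer is chosen here so the result contains no terminal bold sequences.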

What I would like is for my IDE to assist me in using these functions. For example, if I'm looking for some documentation on the str class (as Norbye was doing in his example), I'd like to be able to view the results of help() on some type in a panel in the IDE somewhere. The exact way to input that type's name should be up to better interaction designers than myself, but some sort of dialog that accepts the type name and brings up a panel displaying the help page would be most useful.

The code completion mechanism could then rely on this documentation panel as a 'hint' when type inferencing fails. That said, it should probably be clear from the drop-down that completion is being done from the documentation, so it's clear to the developer why string completion is being done when I'm dealing with something I know not to be a string.
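The fallback described above could look roughly like the following. This is only a sketch under stated assumptions: the function name complete and its parameters are hypothetical, not anything NetBeans provides, and candidates are simply read from dir() on whichever type is available.

```python
def complete(prefix, inferred_type=None, hinted_type=None):
    """Return (source, candidates) for an attribute-name prefix.

    inferred_type is what type inference produced (None when it failed).
    hinted_type is the type currently shown in the documentation panel.
    Both parameters are hypothetical names used for illustration.
    """
    if inferred_type is not None:
        source, target = "inferred", inferred_type
    elif hinted_type is not None:
        source, target = "documentation", hinted_type
    else:
        return ("none", [])
    names = [
        name for name in dir(target)
        if name.startswith(prefix) and not name.startswith("_")
    ]
    return (source, names)


# Inference failed, so completion falls back to the type in the panel and
# says so, letting the drop-down show where the suggestions came from.
print(complete("sp", hinted_type=str))
```

Returning the source alongside the candidates is what lets the UI make clear that completion is being done from the documentation panel rather than from inference.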

When I tried out the NetBeans nightly build for Python to get an idea of how intrusive the comments were (too much so) and how well the other stuff worked (very well), the one time I needed explicit type annotation, it failed. I was dealing with a dict filled with lists, and wanted a list method whose name I couldn't recall offhand. I was not altogether pleased by this, but the overall feel of doing Python development in NetBeans was quite good. Editing felt snappy and responsive. It was very easy to import my existing project (with a non-standard layout) to NetBeans and both navigating through the project and editing worked very nicely.

-

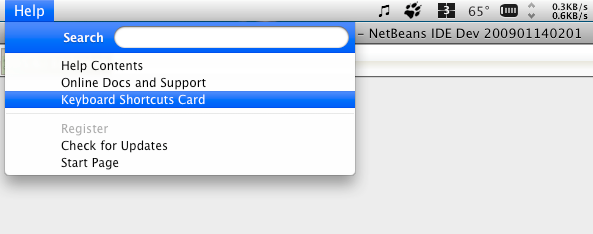

Where to find the NetBeans cheat sheet

Google doesn't return any useful links for this, so here's where to find the NetBeans cheat sheet:

-

ELisp best practices?

I've spent some time looking at Emacs Lisp lately and there's a weird ecosystem going on there. ELisp (and I'm thinking primarily about Emacs configuration here) tends to grow organically, because it's paced by a person's learning of the editor. Every now and then someone needs a new function, and they either search or ask around for something similar. Either it's been implemented before and the code can be copied into their local Emacs configuration or they can write a simple version that is sufficient for initial usage.

The way I tend to grow my Emacs is either piecemeal, as described above, or by reading other people's code. Sharing Emacs configuration code is a long tradition, and there are many webpages dedicated to it (DotEmacs for one, plenty more on the Emacs wiki, and ELisp is the 11th most used language on GitHub). Reading someone else's Emacs configuration is a great way to get new ideas. Recently I've found djcb's dotemacs (he also runs an interesting blog called emacs-fu) and the emacs starter kit very interesting.

In particular, I noted two things. In djcb's dotemacs, he defines macros for conditional requires that degrade gracefully when required libraries are unavailable, instead of erroring. It similarly has a macro, when-available, that runs some code if and only if a given function is available. These are used liberally throughout his configuration, making it robust against unexpected environmental differences. When I first saw this I considered it clever (and still do, really) and wondered whether I should include something similar in my Emacs configuration. In the end I decided against it, because I only use Emacs in very similar versions on two machines, and I keep libraries that are missing from either or both systems under version control. This ensures that I rarely see errors from missing requires or functions.

In emacs-starter-kit the use of ELPA, the Emacs Lisp Package Archive, intrigued me. I'm a big fan of package management, though I tend to be of the 'one package manager to rule them all (per system)' crowd. Using ELPA instead of (re-)versioning all the external code I use seems like a good idea, though I've put it off for a bit due to the number of comments to the effect of 'workaround for bug in ELPA' in the starter kit. I'd also like a web interface to browse available packages and accompanying source so I can check on the status of all the libraries I already use.

Thinking about both these things, gracefully accepting the limitations of environment and package management for dependency resolution, I started to wonder about other best practices for ELisp. While Emacs is a huge framework in and of itself, the incremental improvement pattern for building ELisp doesn't exactly invite building other frameworks. So I wasn't very surprised when I found that the only unit testing framework is quite new and not generally accepted as the right way to test ELisp (discussion here).

None of the advice or ideas I've found have really addressed the practice of writing ELisp, maybe with the exception of Steve Yegge's save-excursion post. Even people who talk about modularity don't generally mention how to best organize ELisp modularly. This isn't necessarily bad. The lack of clear practices does mean one has to make a lot of judgement calls on what the right thing to do at any given point is, taking into account one's own requirements and limitations.

-

Upgrading Ubuntu after EOL

I have a box with Ubuntu 7.04 (Feisty Fawn), which EOLed back in October. Now that I have a few days to spare over the holidays I thought I'd get around to upgrading it to something current. Unfortunately when Feisty was EOLed they moved the package repositories. The new mirror is at old-releases.ubuntu.com.

The error I was getting looked like this:

    # do-release-upgrade
    ....
    E: Some index files failed to download, they have been ignored, or old ones used instead.

To correctly upgrade, I did as follows:

    # sed -i -e 's#us.archive.ubuntu.com#old-releases.ubuntu.com#' /etc/apt/sources.list
    # apt-get update && apt-get upgrade -y

Apt should correctly update all packages to your EOLed release's final version. When that was done, I was ready to upgrade to Gutsy. The upgrade manager the Ubuntu folks have come up with is pretty slick, but doesn't take into account that you might be running an apt mirror that doesn't also serve the version of the distro you're upgrading to. Oops. Getting it sorted isn't that big a deal. I started the upgrade.

Then, when prompted for information about my mirrors, I suspended do-release-upgrade with C-z and fixed my sources.list again, this time to reflect a repository that was invalid for Feisty, but valid for Gutsy:

    # do-release-upgrade
    ...
    Updating repository information

    No valid mirror found

    While scanning your repository information no mirror entry for the upgrade was found. This can happen if you run a internal mirror or if the mirror information is out of date.

    Do you want to rewrite your 'sources.list' file anyway? If you choose 'Yes' here it will update all 'feisty' to 'gutsy' entries.
    If you select 'no' the update will cancel.

    Continue [yN]
    [1]+ Stopped do-release-upgrade
    # sed -i -e 's#old-releases.ubuntu.com#us.archive.ubuntu.com#' /etc/apt/sources.list
    # fg
    y

And after that it was all smooth sailing.

-

Emacs and Rails

So it seems the Rails community is looking at alternative editors. This post by Daniel Fischer gives a pretty good overview. I have to say I approve. Not that TextMate isn't good. It is. But it's very cool for Emacs (and Vi!) that a community that focusses a lot on sharing knowledge and techniques is taking up these editors.

For me this video on ido-mode was a big win. I'm also a fan of the Emacs in 5 minutes demo, and there are many more here. There are also a couple of blog posts going around on experiences with both vi and Emacs, usually comparing them to TextMate. What these three have in common is composability and scriptability. I constantly butt my head against the lack of it in full-blown IDEs. Eclipse, which I spend a bit of time in from day to day, doesn't even have any decent support or plugins for keyboard macros. It's so easy to forget when dealing with editors that recognize ASTs that programming is fundamentally about editing. Sure, the stuff that we can do with refactoring tools is impressive, but if it's taking focus away from thinking about how you can do your own refactorings, it's probably hurting as much as it's helping.

Here's some emacs stuff I use regularly. My dotemacs stuff is available at github if anyone's interested.

- ido-mode - A minor mode that makes many functions autocompleting. My favorite little touch is how it looks at recently opened files when trying to autocomplete filenames. I use this to look up configuration files deep in Java projects quite a bit.

- tramp-mode - Open files over almost any remote (or local) protocol. The linked page has find-alternative-file-with-sudo that opens local files with sudo, which is nice.

- yasnippet - Obligatory snippet minor-mode. I'm not a huge fan of the default binding to tab, so I'll need to find a better one eventually.

- nxml-mode - A superior editing mode for XML and XHTML. I don't even use this properly (yet), and it's still superior to Eclipse's default XML editor.

- Various language modes - There's a fantastic major-mode for Erlang. There's a great one for Scala (superior to anything I've seen in an IDE with one caveat: I can't get maven projects to compile correctly, yet). I haven't tried out js2-mode yet, but I've heard good things. In general people create these for Emacs sooner and better than many IDEs (though some of the NetBeans 7 stuff looks impressive.)

I use Emacs on Ubuntu 8.10, where I use emacs-snapshot-gtk, which is a stable snapshot from CVS. On Mac OS X I use Carbon Emacs, though I'm probably going to move to a build of Emacs.app next time I upgrade. Carbon Emacs is pretty good, but has a couple of weirdisms that I don't like. For example, the zoom button doesn't fill the screen, it just makes the frame 80 columns wide (fixed by max-frame).

While I've taken a couple of potshots at IDEs in this post, I'd like to emphasize that while there is a difference in philosophy, and I adhere to the "editor first, code manager second" philosophy, the IDEs are getting there. The editors didn't just start out awesome at editing, and the IDEs didn't just start out awesome at code managing. Both of them are constantly improving and the choice for the future isn't necessarily clear. All in all, I'd say it's a good time for both of them. More people are diversifying, across both editors and IDEs. This is a good thing too, as it'll drive improvement further.

-

Interesting riposte on git v subversion

Andy Singleton posted a reply to (among others) my rant on Subversion sucking. The thought experiment is pretty interesting. I'm not convinced though.

In general, I see more technological options opening up on many fronts. Version control is just one of these. While I hope people I work with move away from Subversion (so I don't need to deal with it, a highly personal preference), I don't think it's going to die any time soon. I do think it's going to be more distributed in the future. Local history in Subversion 1.8 anyone?

-

We are all library producers

Debasish Ghosh has written a counter to Guido van Rossum's post on Scala. As per usual, Debasish's post is a great read, but it includes one Scala communityism that I find offputting.

Another excellent point that has been raised by David Pollak in one of the comments to Guido's post is that we need to judge complexity of language features separately depending on whether you are a library producer or a library consumer. As a library producer, you need to be aware of many of the aspects of Scala's type system, which may not be that essential as a library consumer.

The fact that developers come in varying levels of competence and interest is not to be conflated with the idea that somehow only some of us create these magical things called "libraries". Unfortunately, there's no divide there. Everyone writes code. Almost everyone reuses some of that code. The way to reuse code is to perform some sort of abstraction on a problem. The type of abstraction used depends to an extent on the language and the linguistic background of the developer in question. A Java developer will generally reach for classes and methods, a C developer will reach for pointers, a Scheme developer will reach for functions and so on.

The job of a language is not to divide these developers into categories (those who do understand the type system and those who don't) and from that decree who can create reusable code. The job of a language is to facilitate abstraction for all developers. If developers can't grasp the abstractions provided by the language, they're simply going to ignore them. Working with people who ignore a language's abstractions is not some sort of desirable situation. In other words, Scala developers need to have at least a tenuous grasp of the type system. If not, they're going to be negative producers on their project.

There are, if you will, two axes of difficulty a language can be gauged on. One is the essential complexity the language inflicts on you when building abstractions, the other is the rote work it demands you do to build them. The standard Java gripe is that while the essential complexity is fairly low, the amount of rote work is high. The justification for this is that the barrier to entry is low, but you can build anything you need. Plus you can scale up the number of Java developers infinitely, so rote work gets done easily. Whereas Ruby and Python sacrifice type safety to lower the amount of rote work without increasing the essential complexities of abstractions (I'd even say lowering them), Scala accepts higher essential complexity in exchange for more type safety and lower amounts of rote work. I'd also agree that the essential complexity can be traded off. That is, more complicated constructs in one place can allow less complicated ones in other places. But this isn't unique to Scala, every language has this feature. It's called abstraction.

Scala is good, but this line about allowing stupid developers to consume the magic mushrooms grown by the smart developers is getting old fast. It's neither true nor a viable argument in favor of using a language.

-

Don't leave broken windows in your code

This article at the Economist discusses research that shows that the visual state of an area primes people's behavior. If an alley contains graffiti, people are more likely to litter. This effect is called the "broken windows" effect. The original idea was that broken windows would lead to more vandalism and eventually break-ins and more serious crime.

In my experience, something similar applies in codebases. Excusing a bad practice one time makes it easier to excuse another one later. And even worse, the effect is compounding. A little litter doesn't affect people as strongly as a lot.

So don't leave broken windows in your code. Run a tight ship and don't let others leave them either. There are several ways to do this. Static analysis tools, test coverage metric requirements and plain old code QA all help. More than that, go even further and always clean up code when you find something messy.

-

Static analysis of code is good

I happen to believe that more static analysis of programs tends to be better. That is, running pre-runtime analysis on a program to find faults is usually beneficial. I'm including automated testing in the definition of "pre-runtime", because no one (no one sane anyway) waits until their program is live to run automated tests.

The one case in which I clearly disagree with the benefits of static analysis is parse-time checking of type safety. Not that parse-time type checking is bad, but that it's not worth the programming language overhead. Reconciling these two beliefs isn't trivial. Most static analysis requires some sort of ceremony to run. The main difference between parse-time type checking and unit tests is that the type checking is probably going to change what language you're writing. But the idea that unit tests don't change your code is bunk. Good testing requires design changes, simply because any code that isn't easy to test is better off being refactored into code that is easy to test. So if all static analysis can potentially change how we write code, why shouldn't we allow pre-runtime type-safety checks to affect our language choice?

It's difficult to say what language is better without resorting to personal preference and problem areas, the latter being much more important in practice. I'd be unwilling to undertake any project requiring more than 100 lines of code without having an automated testing framework available for that language. Thankfully, most languages have a good implementation by now.

So what does parse-time type checking do that other static analyses don't? As mentioned previously, it's done inline. This applies whether or not your static typing is verbose (as with Java) or terse (as with Haskell). The inlining creates noise. Not all static type information is noise, but much of it is. The little documenting effect gained from it is not offset by the added overhead. The only caveat to that sentence is that I haven't used enough terse static typing languages to comment with any authority on them. Like with everything else, I might be wrong, but in this case it'll take much less to convince me that I am.

In addition to inlining the static analysis, parse-time type checking is tightly coupled to the code. This is more important than the inlining in my mind. There is a loose coupling between testing frameworks and testable code. If a framework is found to be lacking, it can be exchanged for another one without rewriting the entire application. The same goes for other lint-like analysis tools. They live outside the application, so can be used interchangeably and stacked. With language type systems you're stuck with whatever your language gives, including all its warts and annoyances.

So that's why I was glad to see this round-up of the state of static analysis tools for Ruby on InfoQ. While I quite like Ruby's type system, along with its lack of type annotations and parse-time checking, I'm much more interested in reading about what static analysis is available for it than whether Java's typing is superior to it or vice versa.

-

Bruce Eckel announces build.py

Here was me complaining about the lack of good build tools for Python and in a wonderful synchronicity Bruce Eckel's gone and made a new one. The post is part of a longer series on Python decorators, which is worth reading in its own right.

Aside from the unfortunate name (can we hope it gets changed to something more googlable?), the tool looks interesting. The basic idea is to use decorators to define rules and plain Python functions to fulfill them. It looks to be based on the older style of build tools (like Make, Ant and Rake), where one uses recipes to build rather than inferring targets from project structures and compiler configurations (like Maven and Buildr). This isn't necessarily bad, as it gives more fine-grained control of what happens as a part of a build.

The key feature that build.py brings from Rake is that the build tool allows you to use a fully featured programming language to define how tasks are executed. This is important for allowing users to create tasks that aren't predicted by plugin makers for the original build tool.
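To make the decorator idea concrete, here is a minimal sketch of a rule registry. It is not build.py's actual API: the names RULES, rule and build, and the three example tasks, are all hypothetical and chosen only for illustration.

```python
# Hypothetical mini build tool. None of these names come from build.py.
RULES = {}


def rule(*depends_on):
    """Register the decorated function as a build rule with dependencies."""
    def register(func):
        RULES[func.__name__] = (func, depends_on)
        return func
    return register


def build(target, done=None):
    """Run a rule after its dependencies, each at most once.

    Returns the list of rule names in the order they ran.
    There is no cycle detection in this sketch.
    """
    done = [] if done is None else done
    if target in done:
        return done
    func, depends_on = RULES[target]
    for dependency in depends_on:
        build(dependency, done)
    func()
    done.append(target)
    return done


@rule()
def clean():
    print("removing old output")


@rule("clean")
def compile_docs():
    print("compiling docs")


@rule("clean", "compile_docs")
def package():
    print("packaging")


order = build("package")
print(order)
```

Because each rule body is an ordinary Python function, a task nobody anticipated can be written with the whole language and standard library at hand, which is the point made above about Rake-style tools.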

This is still just the first release, and it should be more fleshed out as he finishes the Python book he's using build.py for. I look forward to seeing future developments and adoption.

(Incidentally, someone mentioned Vellum in the comments on the post. It's probably worth looking into as well.)

-

Scala needs its own ecosystem

In this post on Graceless Failures, John Kalucki points out that Scala's RichString and Java's String are not comparable. Neither the == operator nor the equals method returns correct equality when comparing RichInt with Integer either. Kalucki's conclusion is to avoid RichStrings when possible, and coerce any back to String as soon as possible. I've bumped into this sort of thing a bit too often when writing Scala, and find it frustrating. The extensions to basic classes done in Scala work against the powerful typing system in my opinion, and the result is impedance rather than clarity.

At the time of this writing the only reply is from Martin Odersky, stating that this problem is going to be solved in version 2.8.0. I find it interesting that he views this as a language level problem. To a degree, it obviously is. If the language did something that made Java primitive boxing classes and Scala Rich primitives directly comparable, the problem would be solved.

This neglects the correct tool for the job, something that Scala design tends to do because of its focus on language level solutions. To me, the reliance on Java types implies a lack of appropriate Scala libraries and Scala library wrappers. One of the advantages of Scala is living in the Java ecosystem, but since Scala has its own idiomatic styles, we're generally better off wrapping Java libraries in Scala code so that they appear to the user as Scala styled libraries. This might seem like a waste of time and effort, but the upshot is worth it. The client code becomes cleaner, more Scala-like and easier to read since it doesn't expend noise on working around Java/Scala integration issues.

However, as Martin Odersky's comment implies, the Scala research team intends to continue playing whack-a-mole with language design to make Java and Scala completely interoperable (or something). This is natural: solving these problems at a language level is much more interesting than writing libraries and wrapper APIs. But that doesn't mean it's more useful. And while the Rich primitives are one example of such a problem, they're far from the only one. The Scala collections library is horrendously incompatible with the Java collections, and with good reason. I would never want an implicit conversion to change the semantics of my underlying types! RichStrings and Strings may be semantically alike, but a Scala immutable Set and a Java Set are not. (Yes, I could make the Java Set immutable, but I've yet to encounter the Java library that returns immutable collections.)

If Scala is to gain more mainstream adoption, development on libraries and frameworks needs to be the next big push, not further language improvements. This is, I guess, the disadvantage of a research institution driving development. We can rely on the EPFL continuing to work on and maintain Scala, but unlike a business value oriented company like Sun, we can't rely on them taking on tasks without research value. This isn't a completely bleak situation though. Since this is open source software, the Scala community can take action and make the necessary push. Scala needs its own ecosystem. In the long run we just can't rely on the Java ecosystem, because there's too much cognitive dissonance going on.

-

Subversion sucks, get over it

The de facto standard for open source version control systems has been Subversion for the last several years. While CVS is still in use in some places, Subversion is miles ahead. While Subversion has served many people well, it has some failings that make it inappropriate for several project classes. The most important of these are open source projects. This post is going to look at why Subversion sucks for open source projects. I'll look at how these arguments also apply to internal business source code management in a future post.

The primary problem with Subversion is the centralized repository. This manifests itself in several ways. Firstly, you must have operations level access to create a new project repository. Secondly, you must have commit access to touch the history of a project. Thirdly, developers are dependent on the project infrastructure to contribute. There are probably more, but today I'll talk about these.

Creating new Subversion project repositories

Creating a new Subversion repository requires access to the svnadmin command on the box running a project's Subversion repositories. This means access (possibly indirect) to a shell account. This raises the bar quite high to be able to create new repositories. This might not seem like a big deal. There's even an ugly hack pattern to work around it. Instead of creating new repositories, organizations put everything in the same Subversion repository. An example of this anti-pattern can be seen in the ASF Subversion repository. This is plain bad design. Navigating through these massive repositories is a pain, dealing with commit access becomes a much larger security issue and the structure of the trunk/tags/branches pattern is broken.

Touching project history

Touching project history might seem like a holy right that should be reserved for vetted people, but this is wrong. Users, not project leads, are the final deciders of code value. Political differences in a project should not impact what code is finally distributed. Maintaining patches out of tree violates the fundamental premise of source code management systems: that source code management should be automated, and not done by hand. Source code management systems that encourage out of tree maintainers to abandon source code management are therefore very problematic.

The other assumption, that an official project contributor is always more qualified than a non-contributor, has been shown to be false several times. In fact, rejecting it is a central premise of the free software movement, the open source community's Right To Fork and the basis of any free market paradigm. Relying on a source code management system that has a centrally controlled access list therefore runs fundamentally counter to ideals that contribute to software quality. This doesn't imply that Subversion leads to worse software, or that it isn't reconcilable to these ideals through clever workarounds, but the dissonance is there and needs to be addressed.

Dependence on infrastructure

The third disadvantage of a central repository is that the lack of local history means one relies on infrastructure availability for source code management. There are primarily two situations where this is important: when the infrastructures fail or when they are unavailable. Infrastructure failure can happen if a server goes down, if a local internet connection fails or a host of other events that affect access to the central repository. Being able to continue to perform source code management under these conditions is important, because infrastructure failure will happen. For open source projects this is important because time is the most valuable asset a developer can contribute.

Other than infrastructure failure, developers are often able to code in places where infrastructure simply isn't available. Internet access is growing more and more ubiquitous, but there are still places to code that don't have access. Whether it's on an airplane, train, in a car or at a cafe without wifi, there are times when project infrastructure simply isn't available and as previously mentioned, time is the most valuable asset of an open source project.

The alternative: Distributed source code management

My distributed source code management system of choice is Git, but that doesn't mean it's right for you. The popular choices these days are Git, Mercurial and Bazaar. There are others, with tradeoffs of their own.

While distributed source code management systems don't solve how to create central project repositories, they make repository creation trivial. This is a big deal. It means that you can start an experimental project with full source code management without polluting the namespace of a central repository. Instead of using the stupid One Big Repository anti-pattern, repositories are cheap things that can be created and destroyed on demand. Some work must be done to make central repository hosting easier, which has given rise to services like GitHub, BitBucket (Mercurial) and Launchpad (Bazaar). These are great ways to trivially host open source projects. Since they're offered as free services to open source projects, the need to maintain any repository-oriented infrastructure simply melts away.

The way distributed source code management systems deal with commit access is ingenious. Since anyone can create history, but a project lead still owns their repository, the project lead can pick and choose history elements rather than digging through patchsets. Instead of sending a patch over email, someone can maintain a fully revisioned repository and send individual commits. This reduces the load for both contributor and project lead, as well as supporting the old commit access structure.

Distributed version control systems give people the ability to maintain a full project history along with patchsets out of tree as the default mode of operation. The issue of touching history simply goes away.

Since these distributed systems give full repository access locally, the dependence on infrastructure falls away. People can continue to work during infrastructure failures or in areas without access to infrastructure, and sync their changes back when it finally becomes available again.

There are other advantages of these systems over Subversion, but these are the ones related to the core assumption of centrally hosted revision control versus locally hosted revision systems.

The business end of things

So far the discussion has focused on open source projects, but almost all these points apply in some fashion to the business case as well. The cases are more varied and not necessarily as clear, but they are all there. I'll look at these issues in a future post.

-

Static vs dynamic still isn't about typing

Tony Morris claims that Java and Ruby don't generalize to static and dynamic typing systems. He's right, but it doesn't really matter. What matters is that in the context of the discussion, we're differentiating between traditional system development languages (C++, Java and C#) and newer system development languages (Python, Ruby). There are other key differences between these languages, but the easiest to identify is that the former are type-checked at parse time and the latter aren't.

For some reason, when I start talking about Python or Ruby to people who have written a lot of the traditional system development languages, it's the typing that trips them up. They ask how these languages deal with things like maintainability or IDE support (for refactoring). So people who speak warmly of Python and Ruby didn't choose to make this about static or dynamic type systems, but that's the position we find ourselves defending.

Talking about OCaml, ML, Haskell or Scala doesn't change the fact that we're presenting alternatives to Java and C#. Yes, it's possible to have terse parse time type-checking. That's great! If only Java and C# people who get hung up on type checking knew about these languages, right?

Incidentally, Tony Morris linked to Chris Smith's excellent article on typing. I've pretty much violated all the principles Smith presents, but as long as you substitute 'static typing' with 'whatever Java does' and 'dynamic typing' with 'whatever Python or Ruby does' in my writing, it should be pretty clear. Sure, there are alternatives, but they're not present in the context of this discussion. When they are, I'll have to revise what I say. Until then, I'll keep using Java and 'statically typed language' interchangeably.

-

Static vs dynamic isn't about typing

In this post about maintenance of applications written in dynamic languages Ola Bini stirs up the whole static vs dynamic debate (again). It's a post well worth reading, especially the comments. One thing Bini points out is that in the context of this discussion, normally, statically typed language is a synonym for Java (and possibly C#), and dynamically typed means Python and Ruby.

This really is a key observation. If you say static typing is too verbose, anyone who's familiar with OCaml, Haskell or Scala disagrees. But the fact is that our current selection of useful statically typed languages is verbose. C# isn't quite as bad as Java, but Java is terrible.

The question then becomes whether Ruby or Python are less maintainable than Java. In my experience, the answer is 'that really depends on your project'. Maintainability isn't a language feature. No matter what language someone is writing in, they can create code that is difficult to maintain. The qualities of maintainable code are different in different styles of language. A coding style that may be easier to deal with down the line in one language can be more difficult in another.

In my experience, tests are far more important than static type checking when refactoring. And personally, I find Java to be worse for reading code than Ruby or Python. People easily become thoughtless code generators when dealing with Java. Just keep hitting ctrl+space until it compiles, right? Meanwhile, languages like Ruby and Python make you focus on what the code actually does when it runs. Neither of these statements is an absolute and they're both limited by my experience, but I have seen both sides of things and my preference is clear.

-

Using Python setuptools on the mac

Python's standard tool for package management is setuptools. The version of setuptools bundled with Mac OS X Leopard is 0.6c7. Unfortunately, setuptools is not self-upgrading, in that it won't replace the easy_install script in /usr/bin, and there's no official .dmg/.pkg to upgrade it. This is important because the easy_install script that's used to install new packages has a hardcoded version of setuptools in it, that it reads from the Python libraries bundled with Leopard.

The hardcoded version string in easy_install became a problem when I tried to install a package that relied on a newer version of setuptools:

$ sudo easy_install -U py
Searching for py
Reading http://pypi.python.org/simple/py/
Reading http://pylib.org
Reading http://codespeak.net/py/0.9.2/download.html
Reading http://codespeak.net/py
Reading http://pypi.python.org/simple/py/XXX
Best match: py 0.9.2
Downloading http://pypi.python.org/packages/source/p/py/py-0.9.2.zip#md5=8447b2ba4c7b4062fcd08aab3377f040
Processing py-0.9.2.zip
Running py-0.9.2/setup.py -q bdist_egg --dist-dir /tmp/easy_install-PWyaOs/py-0.9.2/egg-dist-tmp-qz0KLA
The required version of setuptools (>=0.6c8) is not available, and can't be installed while this script is running. Please install a more recent version first, using 'easy_install -U setuptools'.
(Currently using setuptools 0.6c7 (/System/Library/Frameworks/Python.framework/Versions/2.5/Extras/lib/python))
error: Setup script exited with 2

Installing a newer version of setuptools didn't actually help, since easy_install doesn't get touched by this. There are two (sensible) solutions to this. Either edit /usr/bin/easy_install to reflect the newer version of the setuptools package, or use the easy_install module from python rather than the executable. The latter is preferable since it doesn't involve manually changing stuff in /usr/bin, which is just plain wrong.

So this is how to correctly install packages that rely on a version of setuptools newer than 0.6c7 on a Mac:

$ sudo python -m easy_install py
Searching for py
Best match: py 0.9.2
Processing py-0.9.2-py2.5-macosx-10.5-i386.egg
Adding py 0.9.2 to easy-install.pth file
Installing py.cleanup script to /usr/local/bin
Installing py.lookup script to /usr/local/bin
Installing py.countloc script to /usr/local/bin
Installing py.rest script to /usr/local/bin
Installing py.test script to /usr/local/bin
Using /Library/Python/2.5/site-packages/py-0.9.2-py2.5-macosx-10.5-i386.egg
Processing dependencies for py
Finished processing dependencies for py

This works because python searches sys.path, and the /Library/Python site packages are placed before the bundled packages.
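To see why the order of sys.path matters, here is a minimal sketch of the same shadowing effect. It assumes a current Python 3 rather than the 2.5 that ships with Leopard, and the two temporary directories and the shadow_demo module are made-up stand-ins for the real /Library/Python and bundled locations.

```python
import importlib
import os
import sys
import tempfile


def make_fake_package_dir(label):
    """Create a temp directory holding a tiny module that records its origin."""
    directory = tempfile.mkdtemp(prefix=label + "-")
    with open(os.path.join(directory, "shadow_demo.py"), "w") as handle:
        handle.write("ORIGIN = %r\n" % label)
    return directory


# Hypothetical stand-ins for the two real locations named in the post.
site_packages = make_fake_package_dir("site-packages")
bundled = make_fake_package_dir("bundled")

# Put the site-packages stand-in ahead of the bundled one on the search path.
sys.path[:0] = [site_packages, bundled]
importlib.invalidate_caches()

# Both directories contain shadow_demo.py; the earlier path entry wins.
shadow_demo = importlib.import_module("shadow_demo")
print(shadow_demo.ORIGIN)
```

Both directories hold a module with the same name, but only the copy from the directory that comes first on sys.path is ever imported.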

The state of easy_install isn't that great. There are basically three alternatives for installing Python packages. One is to use the OS package manager, which works on Linux distros like Debian/Ubuntu, where just about everything is ported to a .deb and put in the apt repositories. Unfortunately, MacPorts doesn't have many Python packages. The second is to use easy_install, warts and all. The third is to download source distros and use distutils to install them (using python setup.py install), which has a very nice retro feel to it. Fortunately, help does seem to be on the way.

-

Project structure and unit testing with Python

I've picked up Python again recently (dangerous, I know), and solved a couple of Euler problems to get back into the feel of things. Being back to Python is a little bit like flying, but I have noticed one problem. There isn't really a good build and distribution tool for it.

For each language I use to solve Euler problems, I've set up a project with some sort of build tool that compiles the non-interpreted languages and runs unit tests that check the outputs for correctness. When I started solving problems in Python I couldn't really find a good guide to setting up projects, both for filesystem structure and build tools. I realized that all the Python I'd done previously had either been self-contained scripts or structured in some sort of ad hoc fashion.

This post by Jp Calderone has some good guidelines for filesystem structure. I like that he specifies to make the application usable from the project directory, while still making it installable. The whole setup.py thing is based on distutils, a set of packages for making a python library/application installable. Distutils has its set of problems, but is generally pretty good.

Some further investigation uncovered py.test, part of the py library. It's appealing for several reasons. First of all, it's very non-intrusive. Tests live in any module, and are named test_something.py or something_test.py. In each of these source files, any function or method that starts with test_ is run. I put all my tests in a submodule of my source module named test and created a test_ file corresponding to each of them, with a test_ function for each of my Euler solutions. I could have put them in a module of their own, to keep the tests entirely separate from the solutions module, but I preferred to keep the test module namespaced.
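A minimal sketch of what such a test file could look like. The file name (say, test_multiples.py), the sum_of_multiples function and the problem it solves are illustrative stand-ins, not one of my actual solutions; in a real project the solution would be imported from the solutions module instead of defined next to its tests.

```python
def sum_of_multiples(limit):
    """Sum every natural number below limit that is divisible by 3 or 5."""
    return sum(n for n in range(limit) if n % 3 == 0 or n % 5 == 0)


def test_sum_of_multiples_small_case():
    # Below 10 the multiples are 3, 5, 6 and 9, which add up to 23.
    assert sum_of_multiples(10) == 23


def test_sum_of_multiples_skips_non_multiples():
    # Below 3 there is nothing positive to add.
    assert sum_of_multiples(3) == 0
```

There is no test class to inherit from and nothing to register. The test_ prefix on the file and on the functions is the whole convention, and a plain assert is all a check needs.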

The second reason I like py.test is the py.test commandline runner. Executing py.test at the top level of any project (in fact, any directory) will send it recursing through subdirectories looking for candidates for testing. The simplicity (compared to, for example, setting up JUnit testing with Maven2) is very satisfying. And while it doesn't integrate directly with distutils, being a simple, unparameterized commandline program means I could easily use it in a script for preparing releases. There's also the buildutils project, which extends distutils with, among other things, py.test integration.
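The recursive search is easy to picture. The sketch below is not py.test's actual collection code, just a rough model of the naming convention using the standard library; the find_test_files helper and the throwaway project layout are made up for illustration.

```python
import fnmatch
import os
import tempfile


def find_test_files(root):
    """Walk root recursively and collect files named test_*.py or *_test.py."""
    matches = []
    for directory, _subdirs, filenames in os.walk(root):
        for filename in filenames:
            if (fnmatch.fnmatch(filename, "test_*.py")
                    or fnmatch.fnmatch(filename, "*_test.py")):
                full_path = os.path.join(directory, filename)
                matches.append(os.path.relpath(full_path, root))
    return sorted(matches)


# Build a throwaway project tree to search (hypothetical layout).
project = tempfile.mkdtemp(prefix="project-")
os.makedirs(os.path.join(project, "euler", "test"))
for parts in (("euler", "solutions.py"),
              ("euler", "test", "test_solutions.py"),
              ("euler", "test", "helpers.py")):
    open(os.path.join(project, *parts), "w").close()

# Only the file that follows the naming convention is reported.
print(find_test_files(project))
```

Only test_solutions.py is reported. The solutions module and the helper file are skipped because their names don't follow the convention.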

While neither distutils nor py.test presents a 360 degree solution to packaging and testing, they have the inherently pleasant feel of many python tools and libraries in that they make dealing with their target problems very easy. These kinds of tools and libraries are part of the many things that make Python a lot like flying. Being able to write executable pseudo-code is another.

-

Preload application classes on rails 2.1

I've been playing around with deploying JRuby on Rails onto an application server a bit over the last few weeks, and one of the constant annoyances is that the first request takes a goodly amount of time to complete due to Rails not preloading the application classes. With a couple hundred ActiveRecords that need their metadata read from the DB, this can take a while.

There are a couple of solutions described for preloading applications when deploying on Phusion/Passenger, but there's nothing really for JRuby and application servers. However, it turns out that this behavior is being introduced as default in 2.2, the next version of Rails. The specific commit that introduces it is 3bd34b6. While it doesn't apply cleanly to 2.1, it's pretty easy to introduce. Since we freeze rails into our application, this solution worked quite well.

The upshot is that loading the application takes a little longer, but the first request goes down from taking 30-40 seconds on JBoss on my development box to a much snappier 3-4 seconds. This is particularly a benefit when a restarted server isn't accessed immediately, but only when something important needs to be done a few hours later.

-

Use videos to deliver bug reports

Giles Bowkett found a bug in GitHub, and documented it with a video. This got me thinking about embedding videos in JIRA (or your favorite issue tracker) on bug reports. I don't know if there's a way to do this at the moment, and it'd certainly require a bit of infrastructure to support. But think of the possibilities. Instead of writing out what steps are necessary to recreate a bug, testers can upload a video capture...

Then again, videos still aren't greppable.

-

"Library" oriented programming

Apparently Java is a library oriented programming language because code is packaged as a... library. This makes me wonder what distinguishes it from C. I used to think it was this whole classes and objects thing, but I guess I was wrong. Maybe Java should be called Garbage Collection oriented programming.

-

Jonas Bonér on real-world Scala

Jonas Bonér has posted an introduction to a series about how his company has used Scala for their product. I'm looking forward to reading the series. Worth noting is that he said his team picked up Scala quite quickly.

I'm very much looking forward to reading about the best practices and design choices they made. I'd also like to hear about how they dealt with their Java stack. Specifically, whether they put anything between the Java frameworks or called them directly from their Scala application.

-

Use git tags

I'm quite fond of Git. The home of Git projects on the web is GitHub. It hosts a lot of good projects, mostly Ruby and JavaScript related, but also others. It's a popular home for dotfiles as well.

Unfortunately, the practice of tagging releases isn't as common as it should be. Tags are pretty much just aliases for commits, though they can be annotated and even GPG signed (so, for example, no one can fake a Linux kernel tag by Linus). Part of the reason tagging is rare may be that Git doesn't push tags by default.

But pushing tags is easy: just add the --tags parameter to the push and it'll push all your tags.

git push --tags origin master

Or, if you only want to push a single tag, you can push the tag directly.

git push origin mynewtag

Unlike Subversion tags, these won't create whole new copies of the repository that can accidentally be committed to.

Tags are very useful when you're dealing with different releases of a project and find yourself wondering what the code was like in a previous version or in a version past the one you're currently using. Being able to browse the source directly on a site like GitHub is very valuable, but having to look up which commit has a comment like "bumping version" is annoying and time consuming. Not to mention that it's more difficult to trust any old comment.

So please tag your releases. It doesn't take a lot of effort and makes life easier for people using your project.

-

Scala/JRuby interoperability

This interesting post by Daniel Spiewak covers a bit of the current state of Scala/JRuby interoperability and suggests a couple of ways to improve it. I think interoperability between JVM languages that aren't Java is the next biggest improvement that should be focused on.

Groovy, JRuby, Scala and the others are generally good languages with good (or at least decent) interoperability with Java, but things get hairy when the various hacks start getting exposed to each other. This is especially visible in the first example Spiewak shows, on calling the Scala + operator from JRuby.

Hopefully we'll continue to see work being done in this field. I'd love to see the same thing done for calling Scala from Groovy.

-

Why Scala?

This thread on the scala-debate mailing list (originally from scala-user) should prove an interesting discussion. It basically covers the two key elements for language adoption in the Java mindshare space: Tool support and stable backing.

Erik Engbrecht makes the good point that tool support is coming along and the people working on Scala are paid to do so.

The tool support is definitely still in active development. There's a general feature race going on between the big three IDEs (Eclipse, NetBeans and IDEA) to support as many languages as possible. With all its static analysis, Scala has a lot of potential for IDE support. The key, however, is going to be getting past Java's support. All the extra overhead static typing and analysis brings to the table requires the IDE to handle a lot of things developers can otherwise ignore with dynamic languages. For Scala to compete with Java, it's going to need to equal Java on the stuff that's similar, and provide a better view for developers on all the implicit stuff.

I don't think a bunch of researchers working on the language is quite as solid an endorsement as corporate investment, but that doesn't mean it's not worth a lot. For a lot of people it's going to stand up better as an argument than "the BDFL will not abandon this language, because it's his baby."

-

The future of footwear

Gas powered boots. These are the boots of the future.

YouTube has this, more, and a speed test.

I'm not sure they're the same ones, but they seem a bit unwieldy.